By Yian Ma

Data science is fueling change in our society. It is altering the ways we perceive and participate in industries such as mass media, politics, finance, retail, manufacturing, and health care. On a more institutional level, it is also changing the ways corporations and organizations operate. Many entrepreneurs agree that a major difference between a modern internet-age company and a traditional one lies in their decision-making processes. In modern companies, decisions are pushed down to the level of data scientists, engineers, and product managers, as opposed to the traditional top-down approach in which all major decisions are initiated by the top-ranking officers.

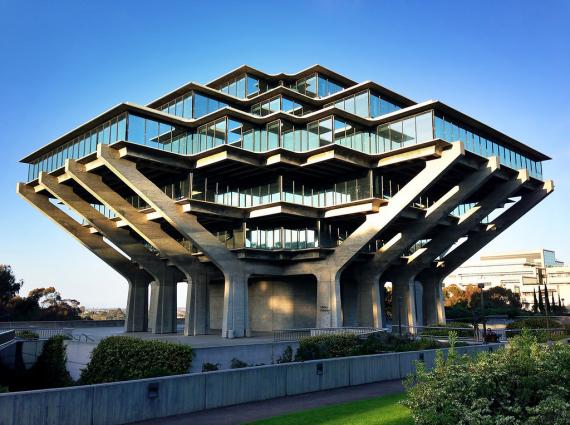

This rising tide of data science in industry has recently synergized with academia, where many data science-related institutes and programs have been established. Speaking from personal experience, I am involved with two such institutions in the University of California system. At UC San Diego, a brand new data science institute cradles a novel ecosystem where talents in methodology, theory, and domain sciences coalesce to take on some of the most daunting challenges in the scientific and technological worlds. At UC Berkeley, a new division of data science breaks the boundary between the schools of science and engineering.

As a graduate of the Applied Mathematics department at the University of Washington, I often reflect on how applied mathematics can best help students develop their technical backgrounds for this era of data science. After all, it is the very tradition of applied mathematics to relate pressing problems from industry and other domain sciences to the elegant language of mathematics. Such an effort often results in better solutions to these challenges, or a better understanding of their difficulties.

This reflection of mine always starts with pondering data science as an emerging discipline. Data science is ultimately concerned with the underlying mechanisms that give rise to large volumes of data. It is often approached via a modeling-inference scheme, where one proposes a class of models and then infers which model (or ensemble of models) is closest to the one generating the observations. There are two very different ways this theme has played out. In a traditional applied mathematics setting, a large amount of effort has been devoted to understanding models in specific domain sciences. In principle, if enough prior information can be acquired about a system of interest, one would require little to no data to construct a model and make accurate predictions. This approach has proven extremely efficient for some mechanical and electrical systems.

On the other hand, there is also a vast literature in statistics and computer science focusing on the inference part of the scheme. With little knowledge about the domain sciences, generic models are proposed and trained with huge amounts of data. The recent success of such approaches has led to new hopes for AI, a human-imitating intelligent system that can handle a variety of tasks by learning from observations or practice. Without delving into the efficacy of this approach, a crucial factor determines whether one can even begin down this path: the scalability of the inference algorithms. If an algorithm doesn't scale to the growing complexities of the data and, correspondingly, the models, there is little hope of augmenting knowledge and intelligence by procuring larger data sets.

I have devoted the past few years to understanding and improving the scalability of various inference algorithms in the face of growing data and model complexities. I would like to share some of my personal experience in drawing inspiration from my applied mathematics background to understand both the intrinsic difficulty and the methodological efficiency of some algorithm design problems. Along the way, I have had a few "a-ha" moments when some of the more abstract mathematical concepts greatly advanced my understanding of the inferential tasks and procedures.

One moment happened when I was trying to understand the relative difficulty of learning an ensemble (or distribution) of models versus finding a single most-likely one. The distribution-based approach benefits from the fact that an entire ensemble automatically encapsulates the uncertainty about the learned model given the observations. It has, however, been criticized for being computationally costly when it comes to large model classes and data sets. Intuitively, this is because we need to navigate through the space of probabilities, an infinite-dimensional non-Euclidean space, to quantify a target distribution. On the other hand, I had long suspected that in certain cases this approach can actually alleviate the challenge of complex local structures and focus more on the global properties of the systems of interest. After contemplating the intrinsic difficulty of learning a distribution of models, I realized that it is determined by the properties of a class of function spaces, the Sobolev spaces in particular, which overlook some of the local details. It immediately struck me that local complexities would not be as troublesome in learning smooth distributions as in obtaining point estimates. Following this intuition, I was able to confirm my suspicion that, in such cases, an ensemble of models can actually be learned significantly faster than a single specific one.
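The contrast between a point estimate and an ensemble can be made concrete with a toy sketch. Everything in it is an assumption chosen for illustration and does not come from the analysis described above: the one-dimensional double-well potential `U`, the step size, and the use of the unadjusted Langevin algorithm as the sampler. Gradient descent settles into whichever well it starts near, while the ensemble of noisy chains spreads over both wells.

```python
import math
import random

def grad_U(x):
    """Gradient of the (assumed) double-well potential U(x) = (x**2 - 1)**2."""
    return 4.0 * x * (x * x - 1.0)

def gradient_descent(x0, h=0.01, n_steps=2000):
    """Point estimate: follow the negative gradient to a nearby local minimum."""
    x = x0
    for _ in range(n_steps):
        x = x - h * grad_U(x)
    return x

def langevin_ensemble(x0, n_chains=500, h=0.01, n_steps=1000, seed=0):
    """Ensemble: unadjusted Langevin chains approximately targeting exp(-U)."""
    rng = random.Random(seed)
    noise_scale = math.sqrt(2.0 * h)
    samples = []
    for _ in range(n_chains):
        x = x0
        for _ in range(n_steps):
            # Gradient step plus Gaussian noise; the noise lets a chain cross the barrier.
            x = x - h * grad_U(x) + noise_scale * rng.gauss(0.0, 1.0)
        samples.append(x)
    return samples

point_estimate = gradient_descent(-1.5)   # stays in the left well, near x = -1
samples = langevin_ensemble(-1.5)         # every chain also starts in the left well
frac_right = sum(s > 0.0 for s in samples) / len(samples)
print(f"gradient descent ends at {point_estimate:.4f}")
print(f"fraction of ensemble in the right well: {frac_right:.2f}")
```

Since the potential is symmetric, roughly half of the chains should end up in each well, even though all of them start on the left. This only illustrates the intuition that an ensemble is less sensitive to local structure; it says nothing about convergence rates in general.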

Another moment happened when I was trying to accelerate the learning of models by designing algorithms that converge fast. When an algorithm "learns" a model, it starts from an initial guess and iteratively refines its belief to better describe the data. It converges when a certain fidelity criterion is met. Designing such an iterative procedure corresponds to searching for a trajectory in the space of all possible models. A natural plan of attack for an applied mathematician is to adopt a continuous dynamical orbit with the desired stationarity and then discretize it to obtain a practical algorithm. To accelerate the convergence, one off-the-shelf method within this strategy is to increase the numerical accuracy of the discretization method. This route, however, can run into the stability-accuracy tradeoff, where a higher order of accuracy is accompanied by a smaller stability region which, in turn, restricts the actual convergence rate. But then I realized that this predicament might not appear if we tailor the continuous dynamics for the benefit of discretization. In particular, when we make the continuous orbit smoother, we are able to increase numerical accuracy without having to sacrifice stability. This leads to an entirely new direction of inventing novel dynamical systems with the desired long-term behaviors. Symplectic structures in the continuous dynamics turn out to be crucial to the design of fast-converging algorithms.
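The stability-accuracy tradeoff can be seen on a deliberately simple example. The sketch below is an illustration under assumptions that are not in the discussion above: the objective is the quadratic f(x) = x²/2, the continuous orbit is its gradient flow dx/dt = -x, and the two discretizations compared are forward Euler (plain gradient descent, first order) and the two-step Adams-Bashforth method (second order). On this problem forward Euler is stable for step sizes h < 2, while two-step Adams-Bashforth is stable only for h < 1.

```python
import math

def grad(x):
    """Gradient of the (assumed) toy objective f(x) = 0.5 * x**2."""
    return x

def euler_descent(x0, h, n_steps):
    """Forward Euler on dx/dt = -grad(x), i.e. plain gradient descent."""
    x = x0
    for _ in range(n_steps):
        x = x - h * grad(x)
    return x

def ab2_descent(x0, h, n_steps):
    """Two-step Adams-Bashforth on the same flow (second-order accurate)."""
    if n_steps == 0:
        return x0
    # A two-step method needs two starting values; bootstrap with one Euler step.
    x_prev, x = x0, x0 - h * grad(x0)
    for _ in range(n_steps - 1):
        x_prev, x = x, x - h * (1.5 * grad(x) - 0.5 * grad(x_prev))
    return x

# Small step: both are stable, and the higher-order method tracks exp(-t) more closely.
exact = math.exp(-1.0)
print("error at t = 1, h = 0.1:",
      abs(euler_descent(1.0, 0.1, 10) - exact),
      abs(ab2_descent(1.0, 0.1, 10) - exact))

# Large step: Euler still contracts toward the minimizer, Adams-Bashforth blows up.
print("after 50 steps, h = 1.5:",
      abs(euler_descent(1.0, 1.5, 50)),
      abs(ab2_descent(1.0, 1.5, 50)))
```

The higher-order scheme wins on accuracy at small steps but forces a smaller step size, which is the restriction on convergence rate described above. Redesigning the continuous dynamics themselves is a different route and is not attempted in this sketch.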

During these episodes of exploration, I have had many revelations about how objects in applied mathematics are essential to solving data science problems. From an algorithmic perspective, some directions in applied mathematics are particularly prominent in making their way into inferential methodologies. Perhaps not very surprisingly, dynamical systems and stochastic processes lie at the center of this endeavor. They provide elegant abstractions and simplifications for various algorithms and facilitate geometric and physical intuitions. Supporting these intuitions are concepts from functional and convex analysis, which provide the basic toolkit for studying the convergence properties of the algorithms. In terms of designing the algorithms, numerical analysis provides a set of existing choices as well as directions for inventing new ones. All of these examples have been invigorating and have given me hope that many more fields in applied mathematics will bolster future developments in data science.