By Bamdad Hosseini

I joined the Department of Applied Mathematics (Amath) at University of Washington (UW) as an Assistant Professor in October of 2021. In celebration of this event and as the new junior faculty in the department I was invited to write this article for the department’s newsletter. I decided to use this opportunity to introduce myself to our community and in particular to those that I have not yet interacted with. I will start with an informal section that summarizes my background and reflects on my first year at UW followed by some research ideas in the second section.

1 My background and the first year at UW

1.1 A short bio

I started my academic career as a mechanical engineer at Sharif University of Technology in Iran. I never came to terms with the pragmatic philosophy of engineering and decided to work on something in natural sciences. After taking a few graduate level courses on computational fluid dynamics and numerical analysis I realized that I wanted to be an applied mathematician.

I joined the Masters program in Applied and Computational Mathematics at Simon Fraser University (SFU) in 2011. This was the first time I heard about the UW Amath department from my friends. Later I spent some quality time with Randy LeVeque’s books [7, 6] and ended up using CLAWPACK to create a 3D model of the dispersion of pollutants in the atmosphere for my masters thesis [2].

An industrial internship introduced me to the fields of inverse problems and uncertainty quantification (UQ) which were on the rise at the time. This led me to pursue a PhD in Bayesian Inverse Problems (BIPs) at SFU in 2013. I became obsessed with applied probability and measure theory and my work became progressively more theoretical. I obtained my PhD in 2018, with my thesis work focusing on computational and theoretical aspects of BIPs [3].

I joined California Institute of Technology as a Postdoctoral fellow in 2018. Machine Learning (ML) and more broadly Data Science (DS) were already popular at this point and I realized many commonalities between ML and BIPs/UQ. This enabled me to branch out into ML and DS where, once again I came across the work of the UW Amath community. In particular, Nathan Kutz, Steve Brunton, and Eli Shlizerman as well as other faculty in the Math, Statistics and Computer Science and Engineering departments.

This brings us to October of 2021 when I joined the Amath department. As I mentioned above I already had great admiration for the department and more broadly UW. It is no secret that the academic career is a difficult path to travel with many sacrifices and compromises along the way. For this, I can only feel a sense of gratitude, to end up at an institute that I regard so highly and to work among some of the top researchers in the country. It also helps that I love Seattle and the broader Pacific Northwest area.

1.2 Reflections on the past year

The highlight of my first year at UW has been the interactions with the graduate students in the Amath program. I was approached by our graduate students a lot faster than I had anticipated and I was truly surprised with their quality, motivation, and curiosity. I am currently advising three PhD students: Alexander Hsu, Biraj Pandey, and Gary Zhao, they are all working on problems in vicinity to the research topics discussed below. I am also working with other students from our department including Nicole Nesbihal, Juan Felipe Osorio Ramirez, Obinna Ukogu and others.

Cross-campus collaborations and exciting new research projects were a most welcome development. I joined the Kantorovich Initiative and formed collaborations with many of the affiliated faculty such as Zaid Harchaoui (Stats), Stefan Steinerberger (Math), Amir Taghvaei (Aero & Astro), and Soumik Pal (Math). I also benefited from many activities organized by the Institute for Foundations of Data Science, AI Institute for Dynamic Systems, and the Computational Neuroscience Center. The prospect of future collaborations and research projects at UW is truly exhilarating.

Moving between the different stages of one’s career is always accompanied with growing pains but the support and advice of my senior colleagues and our wonderful staff was most welcome. I feel truly honored to be a member of our department.

2 Modern twists on Bayesian inference

Here I will discuss some research ideas at the intersection of Bayesian inference, ML and optimal transport. The Bayesian approach is one of two dominant paradigms in statistical inference going back to the works of Thomas Bayes. The other one is the so called Frequentist approach; see the book [9] for a detailed comparison of the two approaches and also Cassie Kozyrkov’s blog post.

Our goal is to infer an unknown parameter X given indirect and limited data Y. The unknown X is represented as a random variable with our prior belief about it modeled by a prior probability distribution p(X). The prior is then combined with the observed data Y through Bayes’ rule to give a updated distribution on the unknown X, called the posterior distribution, and denoted by p(X|Y). We often write Bayes’ rule as

p(X|Y) = p(Y|X)p(X)/p(Y)

The term p(Y|X) is called the likelihood distribution and can often be written explicitly based on some model for the data Y. The term p(Y) is called the evidence and is simply a normalizing constant so that the right hand side is a probability distribution, i.e., integrates to 1.

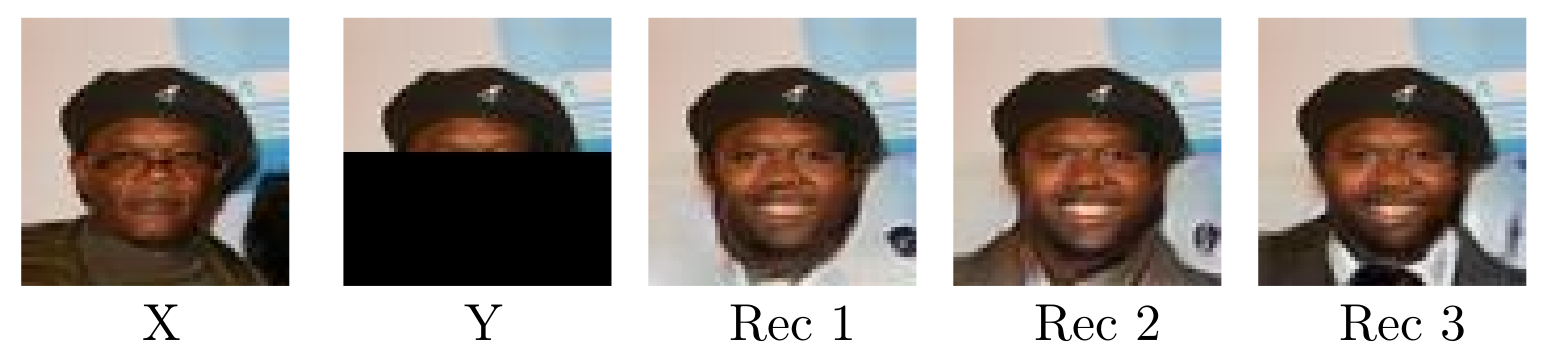

This is a simple enough formula to apply, after all it is only a multiplication of densities. However, the problem becomes interesting when the parameter X is high-dimensional or, even worse, a function which is technically infinite dimensional. As an example, consider the image in-painting problem where we wish to recover an image X given a corrupted version of it Y; see Figure 1 below.

Figure 1: Example of the unknown parameter X and the observed data Y in image in-painting. The image is an instance from the benchmark CelebA data set. Only the top half of the image is observed so a good prior should be constructed that is biased towards images of humans, otherwise we have no hope of recovering X. Rec 1-3 are possible reconstructions using a data-driven prior.

The resulting posterior p(X|Y) is now high dimensional and, save a few exceptions, does not have closed form. This means we often have to give up on representing p(X|Y) directly and resort to computing some of its statistics, i.e., integrals of the form E[h(X)], where E represents the expected value with respect to p(X|Y) and h: X –> R is some test function. This is often a high-dimensional integral so we approximate it using Monte Carlo [8].

Some interesting theoretical questions arise such as well-posedness and stability as well as other perturbation properties of the posterior. The first modern twist on Bayesian inference appears here as the mathematics in the infinite dimensional setting become highly non-trivial; see [4, 10] for example.

In the in-painting example, the data Y is missing a lot of crucial information about the true image X. Therefore, to obtain a photo-realistic reconstruction we need to have a very strong prior that represents the faces of humans. This is where the second twist on Bayesian inference appears as ML techniques are applied to construct such priors from existing data; the so called data-driven techniques. I am interested in understanding and developing such ML techniques from the perspective of optimal transport (OT) [11].

The problem of interest in OT is that of transforming a reference probability distribution q(Z) into a target distribution p(X) using a transport map T: Z -> X in such a way that

T#q = p,

with the equality understood in the sense of measures. The notation T#q denotes the distribution of T(Z) if Z was a random variable with distribution q(Z). As the name suggests the map T is optimal in some sense and has some nice properties; see the book of Villani [11] for details. Once we have the map T we can change variables and rewrite Bayes’ rule as

q(Z|Y) = p(Y|T(Z))q(Z)/q(Y).

The benefit of the above formulation is that now we can use a generic prior q(Z), such as a standard Gaussian which makes the formulation very convenient. At the same time, this formulation gives rise to many exciting theoretical and practical questions: How does the quality of T affect the resulting posterior? Is the problem numerically consistent? how should we construct T in the infinite-dimensional setting? what are the regularity properties of T?

ML techniques such as Generative Adversarial Networks (GANs) [1] and Normalizing Flows (NFs) [5] are convenient computational frameworks for com puting such maps from empirical data but they do not necessarily find OT maps. So it is interesting to understand them from the perspective of OT.

References

1. Ian Goodfellow. “NIPS 2016 tutorial: Generative adversarial networks”. arXiv: 1701.00160. 2016.

2. Bamdad Hosseini. Dispersion of pollutants in the atmosphere: A numerical study. MSc Thesis. 2013.

3. Bamdad Hosseini. Finding beauty in the dissonance: Analysis and applica tions of Bayesian inverse problems. PhD Thesis. 2017.

4. Bamdad Hosseini. “Well-posed Bayesian inverse problems with infinitely divisible and heavy-tailed prior measures”. In: SIAM/ASA Journal on Uncertainty Quantification 5.1 (2017), pp. 1024–1060.

5. Ivan Kobyzev, Simon JD Prince, and Marcus A Brubaker. “Normalizing flows: An introduction and review of current methods”. In: IEEE transac tions on pattern analysis and machine intelligence 43.11 (2020), pp. 3964– 3979.

6. Randall J LeVeque. Finite difference methods for ordinary and partial differential equations: Steady-state and time-dependent problems. SIAM, 2007.

7. Randall J LeVeque. Finite volume methods for hyperbolic problems. Cam bridge University Press, 2002.

8. Jun S Liu. Monte Carlo strategies in scientific computing. Springer, 2001.

9. Francisco J Samaniego. A comparison of the Bayesian and frequentist approaches to estimation. Springer, 2010.

10. Andrew M Stuart. “Inverse problems: A Bayesian perspective”. In: Acta numerica 19 (2010), pp. 451–559.

11. Cédric Villani. Optimal transport: Old and new. Springer, 2009. 5